Electronic health record data quality assessment and tools: a systematic review

Abstract

Objective

We extended a 2013 literature review on electronic health record (EHR) data quality assessment approaches and tools to determine recent improvements or changes in EHR data quality assessment methodologies.

Materials and Methods

We completed a systematic review of PubMed articles published from 2013 to April 2023 that discussed the quality assessment of EHR data. We screened and reviewed papers for the dimensions and methods defined in the original 2013 manuscript. We categorized each paper as a data quality outcomes-of-interest paper, a tool paper, or an opinion piece. We abstracted and defined additional themes and methods through an iterative review process.

Results

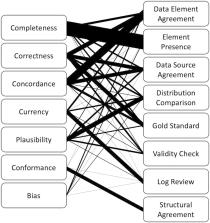

We included 103 papers in the review: 73 data quality outcomes-of-interest papers, 22 tool papers, and 8 opinion pieces. The most commonly assessed dimension of data quality was completeness, followed by correctness, concordance, plausibility, and currency. We abstracted conformance and bias as 2 additional dimensions of data quality and structural agreement as an additional methodology.
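As a rough illustration only, the sketch below computes three of these dimensions (completeness, plausibility, and currency) as simple proportions over a toy set of records. It is not a method taken from the reviewed papers: the `Record` fields, the heart-rate range of 20 to 250 bpm, and the 365-day currency window are hypothetical choices made for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Record:
    """Hypothetical, simplified EHR record; field names are illustrative only."""
    patient_id: str
    birth_date: Optional[date]
    heart_rate: Optional[float]  # beats per minute
    last_updated: Optional[date]


def completeness(records: List[Record], field: str) -> float:
    """Fraction of records in which `field` is present (not None)."""
    if not records:
        return 0.0
    present = sum(1 for r in records if getattr(r, field) is not None)
    return present / len(records)


def plausibility(records: List[Record], field: str, low: float, high: float) -> float:
    """Fraction of present values inside an assumed plausible range [low, high]."""
    values = [getattr(r, field) for r in records if getattr(r, field) is not None]
    if not values:
        return 0.0
    return sum(1 for v in values if low <= v <= high) / len(values)


def currency(records: List[Record], as_of: date, max_age_days: int) -> float:
    """Fraction of records updated within `max_age_days` of `as_of` (assumed window)."""
    if not records:
        return 0.0
    recent = sum(
        1
        for r in records
        if r.last_updated is not None and (as_of - r.last_updated).days <= max_age_days
    )
    return recent / len(records)


# Toy data, invented for this example.
records = [
    Record("p1", date(1980, 5, 1), 72.0, date(2023, 3, 1)),
    Record("p2", None, 300.0, date(2019, 1, 15)),
    Record("p3", date(1955, 9, 9), None, None),
    Record("p4", date(2001, 2, 2), 58.0, date(2023, 4, 10)),
]

if __name__ == "__main__":
    print(completeness(records, "birth_date"))                   # 0.75
    print(round(plausibility(records, "heart_rate", 20, 250), 3))  # 0.667
    print(currency(records, date(2023, 4, 30), 365))             # 0.5
```

Each function returns a proportion so that results can be compared across fields or sites; real assessments typically choose thresholds and reference standards per application.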

Discussion

The number of EHR data quality assessment publications has increased since the original 2013 review. The same dimensions of EHR data quality continue to be assessed across applications. Despite these consistent patterns of assessment, there is still no standard approach for assessing EHR data quality.
