Deep learning–based MR‐to‐CT synthesis: The influence of varying gradient echo–based MR images as input channels

Abstract

Purpose

To study the influence of gradient echo–based contrasts as input channels to a 3D patch‐based neural network trained for synthetic CT (sCT) generation in canine and human populations.

Methods

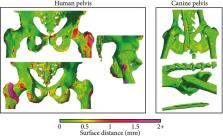

Magnetic resonance images and CT scans of human and canine pelvic regions were acquired and paired using nonrigid registration. Magnitude MR images and Dixon‐reconstructed water, fat, in‐phase, and opposed‐phase images were obtained from a single T1‐weighted multi‐echo gradient‐echo acquisition. From this set, 6 input configurations were defined, each containing 1 to 4 MR images used as input channels. For each configuration, a UNet‐derived deep learning model was trained for sCT generation. The reconstructed Hounsfield unit maps were evaluated with peak SNR, mean absolute error, and mean error. The Dice similarity coefficient and surface distance maps were used to assess the geometric fidelity of bones. Repeatability was estimated by replicating the training up to 10 times.
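To make the idea of input configurations concrete, the following sketch stacks selected contrasts into a channel‐first array of the kind a 3D patch‐based network consumes, then crops one patch. It is a minimal illustration under stated assumptions, not the authors' pipeline: water and fat are derived with an idealized two‐point Dixon formula (no B0 inhomogeneity, water‐dominant voxels), whereas the study used its own multi‐echo Dixon reconstruction; the function names, configuration names, volume shape, and patch size are all hypothetical.

```python
import numpy as np

def two_point_dixon(in_phase: np.ndarray, opposed_phase: np.ndarray):
    """Idealized two-point Dixon separation (assumption: no B0 inhomogeneity
    and water-dominant voxels, so in-phase = W + F and opposed-phase = W - F)."""
    water = 0.5 * (in_phase + opposed_phase)
    fat = 0.5 * (in_phase - opposed_phase)
    return water, fat

def stack_channels(images: dict, config: list) -> np.ndarray:
    """Stack the contrasts named in config into a channel-first (C, D, H, W) array."""
    return np.stack([images[name] for name in config], axis=0)

def extract_patch(volume: np.ndarray, corner: tuple, size: tuple) -> np.ndarray:
    """Crop a 3D patch from a channel-first volume, keeping every channel."""
    z, y, x = corner
    d, h, w = size
    return volume[:, z:z + d, y:y + h, x:x + w]

# Synthetic magnitude images (hypothetical shape); op <= ip keeps fat non-negative.
rng = np.random.default_rng(0)
shape = (32, 64, 64)
ip = rng.uniform(100.0, 200.0, shape)
op = ip - rng.uniform(0.0, 100.0, shape)
water, fat = two_point_dixon(ip, op)
images = {"in_phase": ip, "opposed_phase": op, "water": water, "fat": fat}

# Two hypothetical configurations: one single-channel, one four-channel.
configs = {
    "single_ip": ["in_phase"],
    "dixon_all": ["in_phase", "opposed_phase", "water", "fat"],
}
x1 = stack_channels(images, configs["single_ip"])
x = stack_channels(images, configs["dixon_all"])
patch = extract_patch(x, (0, 0, 0), (16, 32, 32))
print(x1.shape, x.shape, patch.shape)  # (1, 32, 64, 64) (4, 32, 64, 64) (4, 16, 32, 32)
```

Only the first axis changes between configurations, so the same UNet‐style backbone can be reused by adjusting the number of input channels of its first convolution.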

Results

Seventeen canines and 23 human subjects were included in the study. The performance and repeatability of single‐channel models depended on TE‐related water–fat interference, with variations of up to 17% in mean absolute error overall and up to 28% in bones specifically. Repeatability, Dice similarity coefficient, and mean absolute error were statistically significantly better for multichannel models, with mean absolute error ranging from 33 to 40 Hounsfield units in humans and from 35 to 47 Hounsfield units in canines.
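The evaluation metrics named in the Methods and reported here (mean absolute error and mean error in Hounsfield units, peak SNR, and the Dice similarity coefficient for bone) can be sketched with NumPy as follows. This is a minimal illustration, not the authors' evaluation code: the sign convention for mean error (sCT minus CT), the HU data range used for PSNR, the 200 HU bone threshold, and the `relative_spread` summary of replicate trainings are all assumptions, since the abstract does not specify them. The toy arrays are made up for illustration only.

```python
import numpy as np

def mae(sct, ct, mask):
    """Mean absolute error in HU over the voxels selected by mask."""
    return float(np.mean(np.abs(sct[mask] - ct[mask])))

def mean_error(sct, ct, mask):
    """Signed mean error in HU (assumed convention: sCT - CT, so positive = overestimation)."""
    return float(np.mean(sct[mask] - ct[mask]))

def psnr(sct, ct, mask, data_range):
    """Peak SNR in dB; data_range is an assumed HU span used for normalization."""
    mse = np.mean((sct[mask] - ct[mask]) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / mse))

def dice(seg_a, seg_b):
    """Dice similarity coefficient between two binary masks (1.0 if both are empty)."""
    a = seg_a.astype(bool)
    b = seg_b.astype(bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)

def relative_spread(values):
    """Hypothetical repeatability summary: (max - min) / mean across replicate runs, in %."""
    v = np.asarray(values, dtype=float)
    return float(100.0 * (v.max() - v.min()) / v.mean())

# Toy four-voxel example (made-up values).
ct = np.array([0.0, 100.0, 300.0, 500.0])
sct = np.array([10.0, 90.0, 320.0, 460.0])
body = np.ones_like(ct, dtype=bool)
BONE_HU = 200.0  # hypothetical bone threshold

print(mae(sct, ct, body))                 # 20.0
print(mean_error(sct, ct, body))          # -5.0
print(dice(ct > BONE_HU, sct > BONE_HU))  # 1.0
print(psnr(sct, ct, body, data_range=4095.0))
```

Restricting `mask` to a bone segmentation yields bone‐specific errors analogous to the bone results quoted in the abstract, and feeding per‐run MAE values from replicate trainings into `relative_spread` gives one possible, assumed measure of run‐to‐run variation.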
