
Multimodal deep learning from satellite and street-level imagery for measuring income, overcrowding, and environmental deprivation in urban areas


Abstract

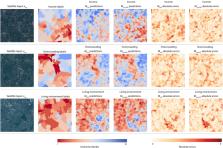

Data collected at large scale and low cost (e.g., satellite and street-level imagery) have the potential to substantially improve the resolution, spatial coverage, and temporal frequency with which urban inequalities are measured. Multiple types of data from different sources are often available for a given geographic area, yet most studies use a single type of input data because of the methodological difficulties of combining them. We propose two deep learning-based methods that jointly use satellite and street-level imagery to measure urban inequalities. Using London as a case study, we consider three outcomes, each measured in decile classes: income, overcrowding, and environmental deprivation. We compare the performance of our multimodal models with that of the corresponding unimodal models using mean absolute error (MAE). In the first approach, satellite tiles are appended to street-level images to enhance predictions at locations where street images are available, improving accuracy by 20%, 10%, and 9% (with MAE measured in decile classes) for income, overcrowding, and living environment, respectively. The second approach, novel to the best of our knowledge, uses a U-Net architecture to make predictions for all grid cells in a city at high spatial resolution (e.g., 3 m × 3 m pixels in London in our experiments). It exploits the citywide availability of satellite images as well as the sparser information from street-level images where they are available, improving accuracy by 6%, 10%, and 11%. We also show example prediction maps from both approaches to visually highlight differences in performance.
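The model comparison rests on MAE computed over decile-class labels. The sketch below shows one way such a comparison could be computed. It assumes deciles are coded as integers 1 to 10 and that "improvement" means the relative reduction in MAE of a multimodal model versus its unimodal counterpart. The function names and the toy values are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def mae(y_true: Sequence[int], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in units of decile classes (labels assumed to be 1..10)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def relative_improvement(mae_unimodal: float, mae_multimodal: float) -> float:
    """Percentage reduction in MAE of the multimodal model relative to the unimodal one."""
    if mae_unimodal == 0:
        raise ValueError("unimodal MAE must be non-zero")
    return 100.0 * (mae_unimodal - mae_multimodal) / mae_unimodal


# Hypothetical toy labels and predictions, for illustration only.
y_true = [1, 4, 7, 10]
unimodal_pred = [2, 5, 8, 9]    # off by one class everywhere
multimodal_pred = [1, 5, 8, 9]  # exact on the first location

m_uni = mae(y_true, unimodal_pred)      # 1.0
m_multi = mae(y_true, multimodal_pred)  # 0.75
print(relative_improvement(m_uni, m_multi))  # 25.0
```

Expressing the gain as a relative reduction makes results comparable across outcomes whose baseline MAEs differ.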

Graphical abstract

Highlights

- Our model uses information from both street-level and satellite images.
- The proposed multimodal measurement approaches outperform unimodal ones.
- The model can deal with missing data during training and prediction.
- Multimodal frameworks can incorporate additional modalities (e.g., aerial images).
- Applications can be extended to other outcomes.
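The highlights say the model tolerates missing data. The sketch below illustrates one common way of achieving this, and is not necessarily the authors' method. Missing street-level features are zero-filled, a binary availability mask is added as an extra input channel, and the loss is computed only over labelled cells. The functions `fill_with_mask`, `stack_channels`, and `masked_mae` are hypothetical. Each grid cell holds a single scalar feature for brevity, whereas real inputs would be image tensors or learned embeddings.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]


def fill_with_mask(street: Grid, fill: float = 0.0):
    """Replace missing street-level values (None) with `fill`.

    Returns (filled_grid, mask_grid); the mask is 1.0 where data exists, else 0.0.
    """
    filled = [[fill if v is None else v for v in row] for row in street]
    mask = [[0.0 if v is None else 1.0 for v in row] for row in street]
    return filled, mask


def stack_channels(satellite: List[List[float]], street: Grid):
    """Build a per-cell input vector [satellite, street_or_fill, street_available]."""
    filled, mask = fill_with_mask(street)
    return [[[s, f, m] for s, f, m in zip(srow, frow, mrow)]
            for srow, frow, mrow in zip(satellite, filled, mask)]


def masked_mae(y_true: Grid, y_pred: List[List[float]]) -> float:
    """MAE over cells that have a label; unlabelled cells (None) are ignored."""
    errors = [abs(t - p)
              for trow, prow in zip(y_true, y_pred)
              for t, p in zip(trow, prow) if t is not None]
    if not errors:
        raise ValueError("no labelled cells")
    return sum(errors) / len(errors)


# Hypothetical 2 x 2 grid: satellite data everywhere, street-level data in two cells.
satellite = [[0.2, 0.4], [0.6, 0.8]]
street = [[0.5, None], [None, 0.9]]
print(stack_channels(satellite, street))
# [[[0.2, 0.5, 1.0], [0.4, 0.0, 0.0]], [[0.6, 0.0, 0.0], [0.8, 0.9, 1.0]]]

y_true = [[3, None], [7, 9]]          # one cell has no decile label
y_pred = [[4.0, 5.0], [7.0, 8.0]]
print(masked_mae(y_true, y_pred))     # 2/3, about 0.667
```

The mask channel lets a network distinguish "no street image here" from a genuine zero-valued feature. Masking the loss lets partially labelled grids still be used for training.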
