- Record: found

- Abstract: found

- Article: not found

Self-attention CNN for retinal layer segmentation in OCT

Read this article at

Abstract

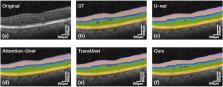

The structure of the retinal layers provides valuable diagnostic information for many ophthalmic diseases. Optical coherence tomography (OCT) obtains cross-sectional images of the retina, which reveals information about the retinal layers. The U-net based approaches are prominent in retinal layering methods, which are usually beneficial to local characteristics but not good at obtaining long-distance dependence for contextual information. Furthermore, the morphology of retinal layers with the disease is more complex, which brings more significant challenges to the task of retinal layer segmentation. We propose a U-shaped network combining an encoder-decoder architecture and self-attention mechanisms. In response to the characteristics of retinal OCT cross-sectional images, a self-attentive module in the vertical direction is added to the bottom of the U-shaped network, and an attention mechanism is also added in skip connection and up-sampling to enhance essential features. In this method, the transformer’s self-attentive mechanism obtains the global field of perception, thus providing the missing context information for convolutions, and the convolutional neural network also efficiently extracts local features, compensating the local details the transformer ignores. The experiment results showed that our method is accurate and better than other methods for segmentation of the retinal layers, with the average Dice scores of 0.871 and 0.820, respectively, on two public retinal OCT image datasets. To perform the layer segmentation of retinal OCT image better, the proposed method incorporates the transformer’s self-attention mechanism in a U-shaped network, which is helpful for ophthalmic disease diagnosis.

Related collections

Most cited references26

- Record: found

- Abstract: not found

- Book Chapter: not found

U-Net: Convolutional Networks for Biomedical Image Segmentation

- Record: found

- Abstract: not found

- Conference Proceedings: not found

Fully convolutional networks for semantic segmentation

- Record: found

- Abstract: not found

- Article: not found