Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature

Abstract

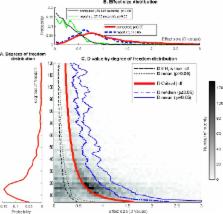

We have empirically assessed the distribution of published effect sizes and estimated power by analyzing 26,841 statistical records from 3,801 cognitive neuroscience and psychology papers published recently. The reported median effect size was D = 0.93 (interquartile range: 0.64–1.46) for nominally statistically significant results and D = 0.24 (0.11–0.42) for nonsignificant results. Median power to detect small, medium, and large effects was 0.12, 0.44, and 0.73, reflecting no improvement through the past half-century. This is so because sample sizes have remained small. Assuming similar true effect sizes in both disciplines, power was lower in cognitive neuroscience than in psychology. Journal impact factors negatively correlated with power. Assuming a realistic range of prior probabilities for null hypotheses, false report probability is likely to exceed 50% for the whole literature. In light of our findings, the recently reported low replication success in psychology is realistic, and worse performance may be expected for cognitive neuroscience.
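To make the relationship between sample size and power concrete, the following is a minimal sketch of a power calculation for a two-sided, two-sample comparison with equal group sizes. It uses a normal approximation rather than the exact noncentral t distribution, and it is not the authors' procedure. The function name `approx_power`, the conventional small, medium, and large effect sizes (0.2, 0.5, 0.8), and the group sizes in the usage example are assumptions chosen for illustration.

```python
from statistics import NormalDist


def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (equal group sizes).

    Normal approximation: the test statistic is treated as normally
    distributed with mean d * sqrt(n_per_group / 2) and unit variance.
    Illustrative only; this is not the procedure used in the article.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)   # two-sided critical value
    shift = d * (n_per_group / 2) ** 0.5          # expected test statistic
    # Probability of landing in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


if __name__ == "__main__":
    # Hypothetical group sizes, chosen only to show the trend.
    for n in (10, 20, 50, 100):
        powers = [approx_power(d, n) for d in (0.2, 0.5, 0.8)]
        print(n, [round(p, 2) for p in powers])
```

Under this approximation, power rises with both effect size and group size, which is why persistently small samples keep power low for small and medium effects.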

Author summary

Biomedical science, psychology, and many other fields may be suffering from a serious replication crisis. In order to gain insight into some factors behind this crisis, we have analyzed statistical information extracted from thousands of cognitive neuroscience and psychology research papers. We established that the statistical power to discover existing relationships has not improved during the past half-century. A consequence of low statistical power is that research studies are likely to report many false positive findings. Using our large dataset, we estimated the probability that a statistically significant finding is false (called false report probability). With some reasonable assumptions about how often researchers come up with correct hypotheses, we conclude that more than 50% of published findings deemed to be statistically significant are likely to be false. We also observed that cognitive neuroscience studies had higher false report probability than psychology studies, due to smaller sample sizes in cognitive neuroscience. In addition, the higher the impact factor of the journal in which a study was published, the lower its statistical power. In light of our findings, the recently reported low replication success in psychology is realistic, and worse performance may be expected for cognitive neuroscience.
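The dependence of false report probability on power and on the prior probability of the null hypothesis can be sketched with the standard Bayes-rule expression: among significant results, the false share is α·P(H0) / (α·P(H0) + power·(1 − P(H0))). This is a simplified illustration that ignores bias and other factors, so it does not reproduce the authors' estimate. The function name `false_report_probability` and the prior values in the usage example are assumptions; the power value 0.44 is the median power for medium effects quoted in the abstract.

```python
def false_report_probability(power, prior_h0, alpha=0.05):
    """Share of statistically significant findings that are false positives.

    power    : probability of detecting a true effect
    prior_h0 : assumed prior probability that the null hypothesis is true
    alpha    : significance threshold

    Simplified Bayes-rule sketch with no bias term; illustrative only.
    """
    if not 0.0 <= prior_h0 <= 1.0:
        raise ValueError("prior_h0 must lie in [0, 1]")
    false_positives = alpha * prior_h0          # true nulls that reach significance
    true_positives = power * (1.0 - prior_h0)   # real effects that reach significance
    return false_positives / (false_positives + true_positives)


if __name__ == "__main__":
    median_power_medium = 0.44  # median power for medium effects, from the abstract
    # Hypothetical priors for H0, chosen only to show the trend.
    for prior_h0 in (0.5, 0.8, 0.9, 0.95):
        frp = false_report_probability(median_power_medium, prior_h0)
        print(f"P(H0) = {prior_h0:.2f} -> FRP = {frp:.2f}")
```

The sketch shows the qualitative point of the summary: at fixed α, lower power or a higher prior probability of the null hypothesis raises the share of significant findings that are false.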
